
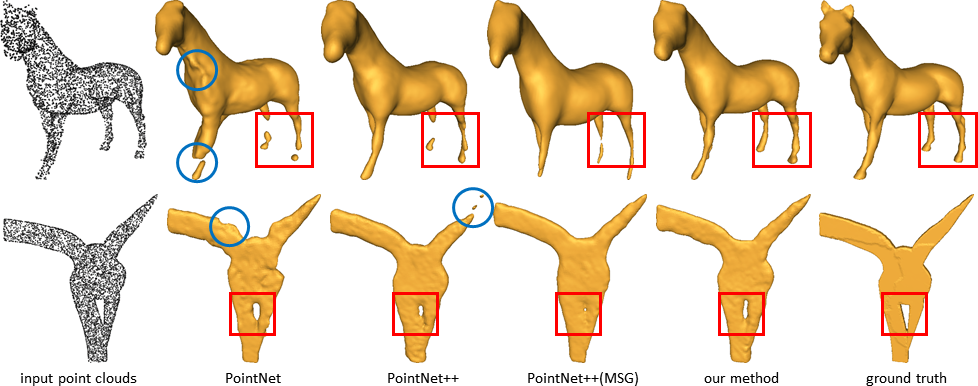

Architecture of PU-Net.

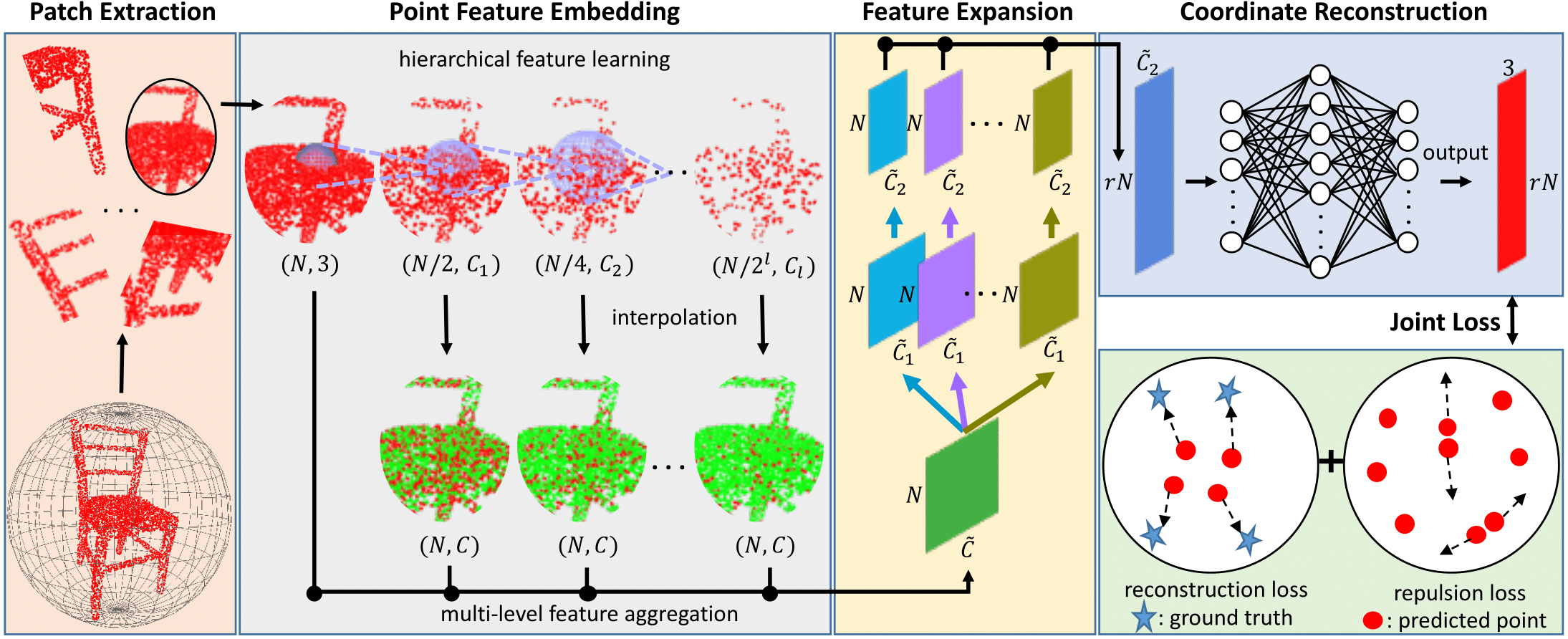

Architecture of Feature Expansion.
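The feature expansion stage pictured above can be illustrated with a small sketch. It assumes the design described in the PU-Net paper: r separate branches of point-wise (1x1) convolutions, whose outputs are stacked along the point axis so that an N × C feature map becomes rN × C′. The function name, layer widths, and random weights below are hypothetical stand-ins for the learned network, not the authors' implementation.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def feature_expansion(features, r, c_hidden, c_out, seed=0):
    """Expand an (N, C) feature map to (r * N, c_out). Hypothetical sketch.

    Each of the r branches applies its own two point-wise (1x1) layers,
    implemented here as matrix multiplies with random (untrained) weights.
    """
    rng = np.random.default_rng(seed)
    n, c = features.shape
    branches = []
    for _ in range(r):
        # Separate weights per branch, so each copy of the features diverges.
        w1 = rng.standard_normal((c, c_hidden)) * 0.1
        w2 = rng.standard_normal((c_hidden, c_out)) * 0.1
        branches.append(relu(relu(features @ w1) @ w2))
    # Stack the r branch outputs along the point axis: (r * N, c_out).
    return np.concatenate(branches, axis=0)


feats = np.random.default_rng(1).standard_normal((1024, 256))
expanded = feature_expansion(feats, r=4, c_hidden=256, c_out=128)
print(expanded.shape)  # (4096, 128)
```

Using distinct weights per branch is what lets the r copies of each point's feature differ; in the full network, the expanded features would then be regressed to 3D coordinates to produce the upsampled point set.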

Lequan Yu, Xianzhi Li, Chi-Wing Fu, Daniel Cohen-Or, Pheng-Ann Heng. EC-Net: an Edge-aware Point set Consolidation Network. In CVPR, 2018.

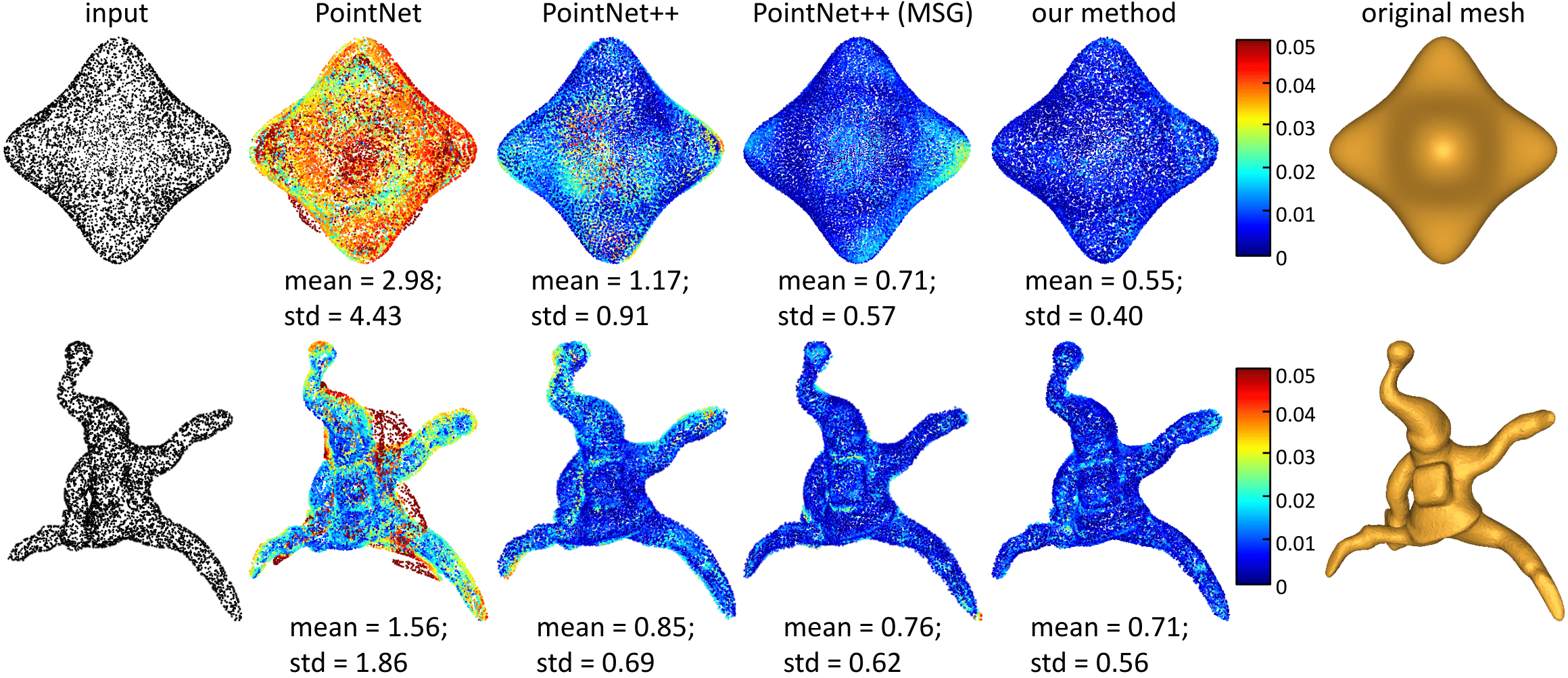

Visual comparison of deviation. Point colors indicate each point's distance error to the underlying surface.

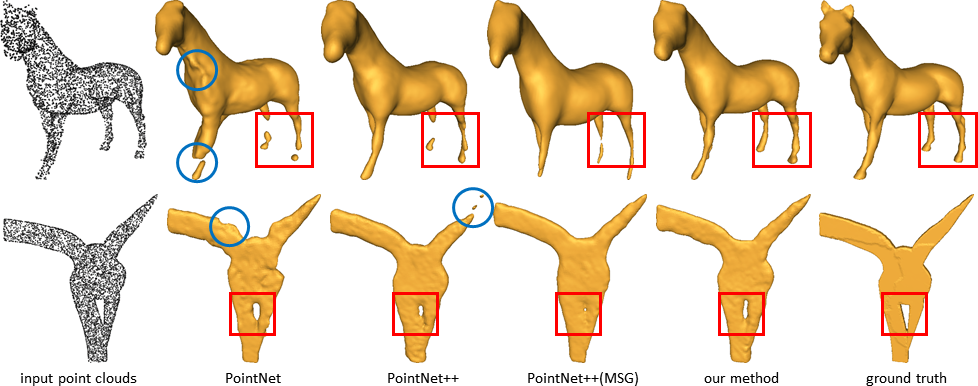

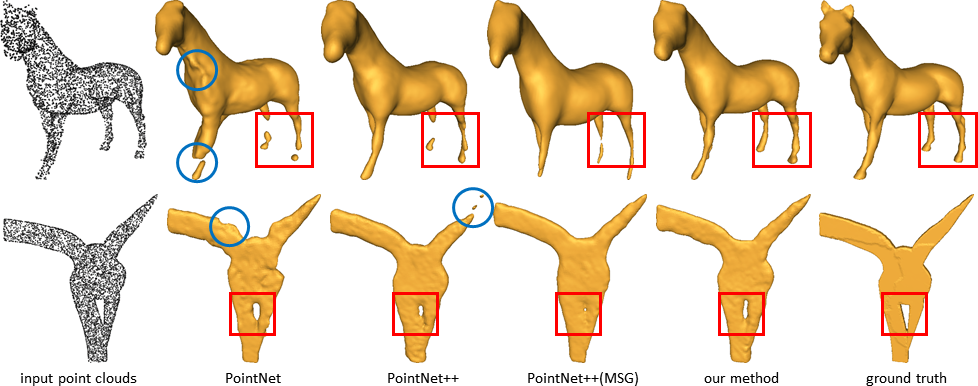

Surface reconstruction results from the upsampled point clouds.

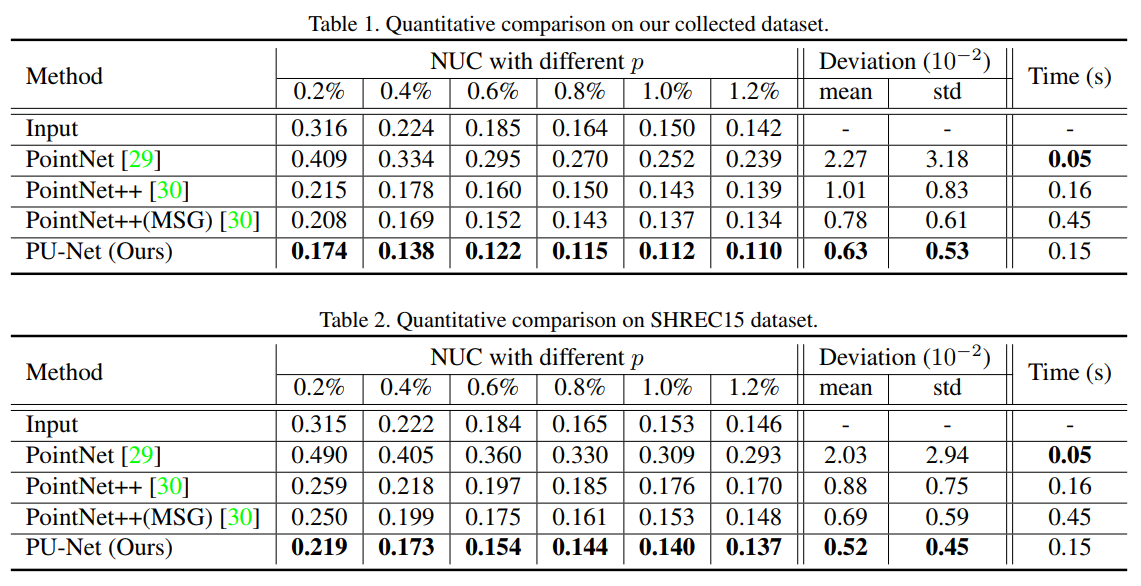

Quantitative comparison on the test dataset.

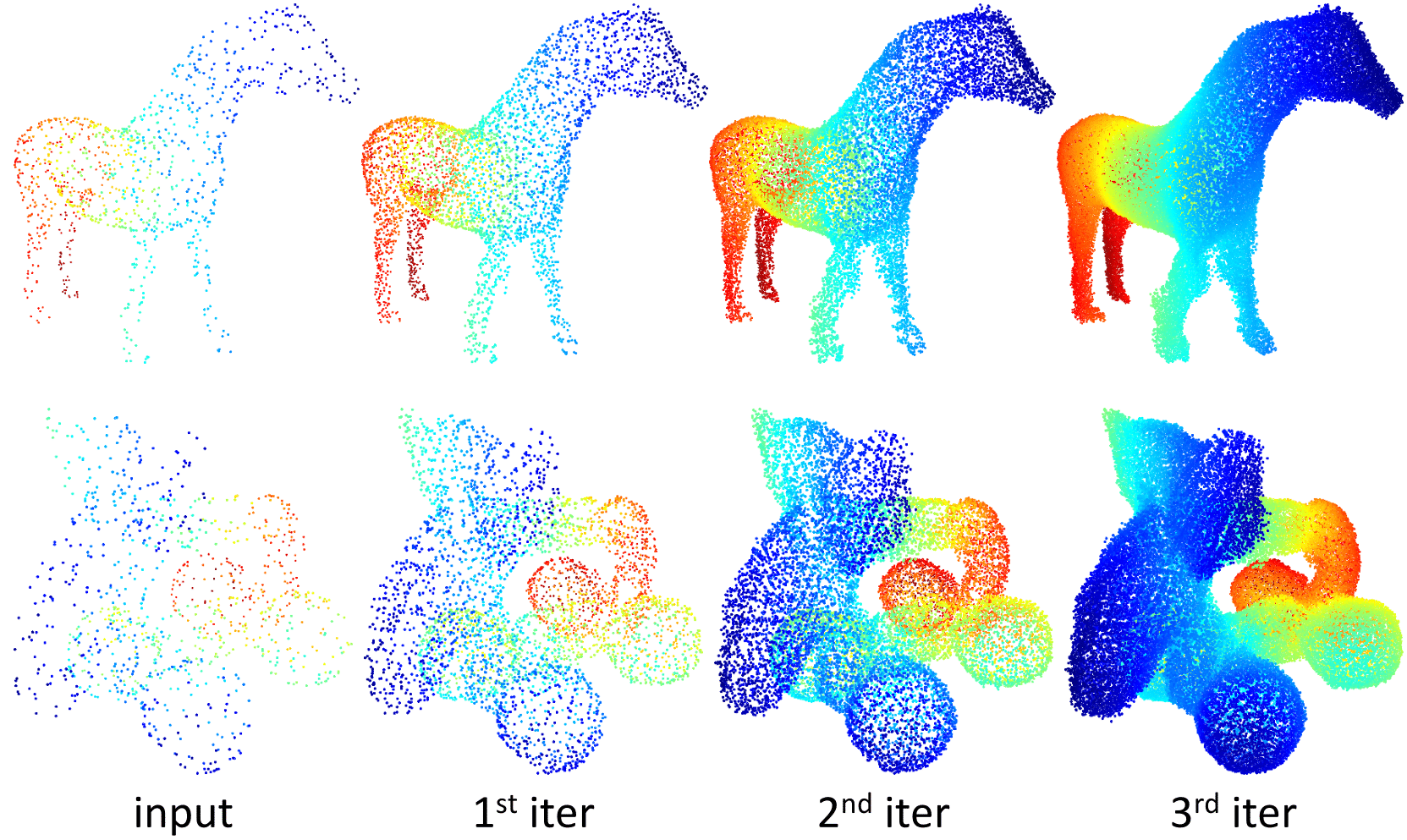

Results of iterative upsampling.

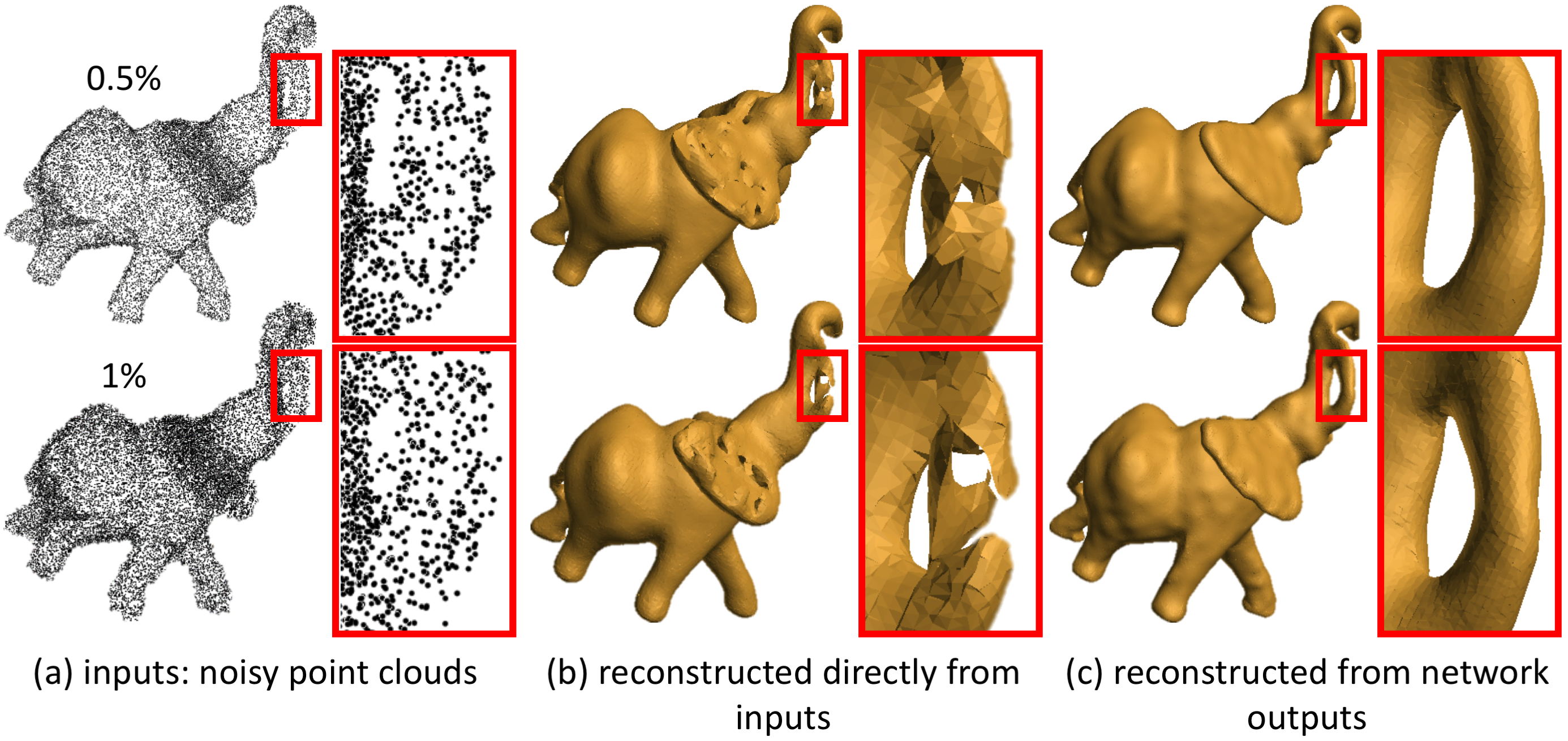

Surface reconstruction results from noisy input points.

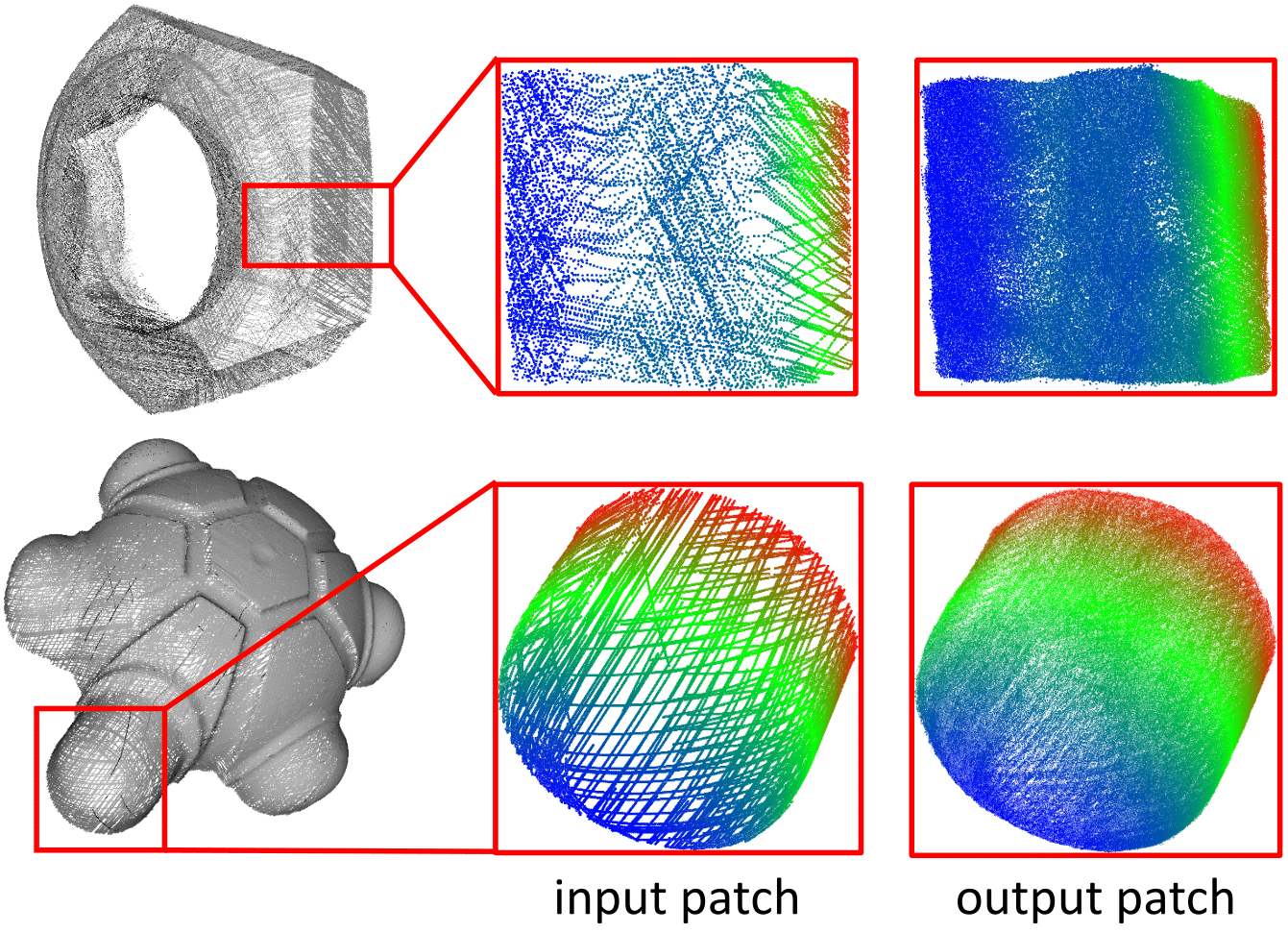

Results on real-scanned point clouds.

Acknowledgements